Development

Viper Online Services

December 15, 2023

Knockout City requires reliable online services to do a wide range of things necessary for players to have enjoyable matches. That includes matchmaking, authentication, finding friends, reporting misbehaving users, delivering updates, tracking progress, etc.

Building our online services with the same philosophies and practices as the rest of Viper led to some unusual decisions in the space of network services and, we think, some pretty good results!

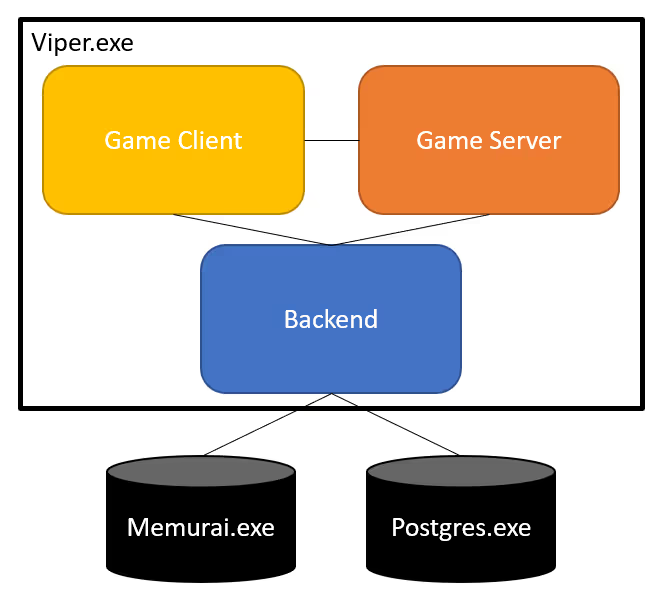

Notably, we use a fully unified code base. Backend services, game server, and game client all live in a single source tree, are all written in a single language (C) sharing the same set of core libraries, and can all build into a single unified executable. This makes it much easier to build and deploy a change across the entire stack: game client, server, and services. Debugging is as simple as pressing F5 in Visual Studio and setting breakpoints.

We believe our approach helped our small team of 4 network programmers build a suite of network services totaling about 60k lines of code from the ground up to support Knockout City.

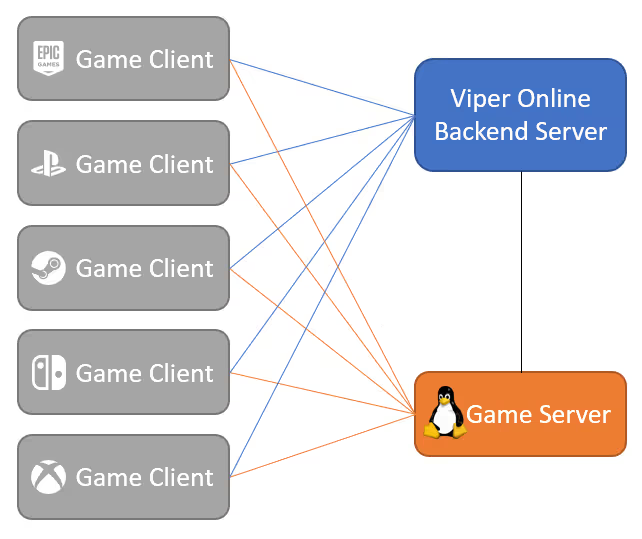

The General Flow of Information

When you launch Knockout City and sign in, the game makes an HTTP request to us to authenticate and then establishes a websocket connection for the duration of your play session. Everything about moment-to-moment gameplay, such as whether you timed that catch right or stopped short of dashing into that wall, happens between you and a game server, both running a simulation of the game world. Everything involving any persistent information or the greater state of the world, whether it’s who just threw a ball at you or which hats you own, is communicated over a websocket connection between you, your game server, and a Viper Online backend server.

A Tour of the Services

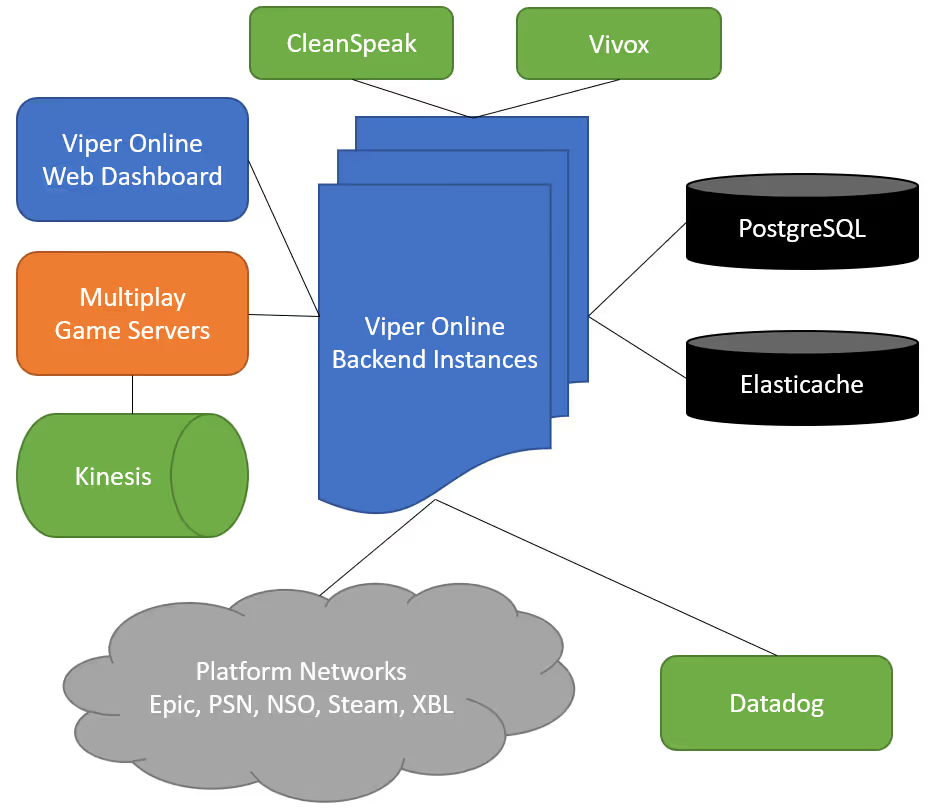

A Viper Online backend is a C program like the rest of Viper that accepts HTTP requests and websocket connections from game clients and headless servers. We currently run 32 backends in an Amazon ECS cluster of 8 machines (4 backend instances per machine).

The backend instances accept connections over which they receive and respond to requests. To handle a request, a backend talks to one or more of the following: a PostgreSQL database for persistent information; an ElastiCache instance for more transient information, or to communicate with another game client or server via another backend; and external services over HTTP. Examples of the last include requesting a game server for you from Multiplay, our server provider, confirming that you successfully purchased those Holobux, and sending telemetry to Amazon Kinesis.

We also have an internal dashboard for poking switches and tunables we've exposed for ourselves and inspecting the state of the databases at a glance. This is all part of the same backend executable with the addition of a web application built with Vue. There's one other web application for inspecting recent events related to a user, primarily for diagnosing issues when they happen in the wild.

Finally, we send statistics and logs to Datadog so that we can monitor the health of all of the parts by observing graphs and receiving notifications when things meet our alert criteria.

About the Code

As we mentioned in our Introducing Viper post, these online services can be run in the same process as the game client and headless servers during development. That means we can hit "Build and Run" in a debugger and step, inspect, and hit breakpoints across all of them. It also means that, at least locally, they're never incompatible due to common reasons like forgetting or failing to re-sync, re-build, re-deploy, or re-launch one of them.

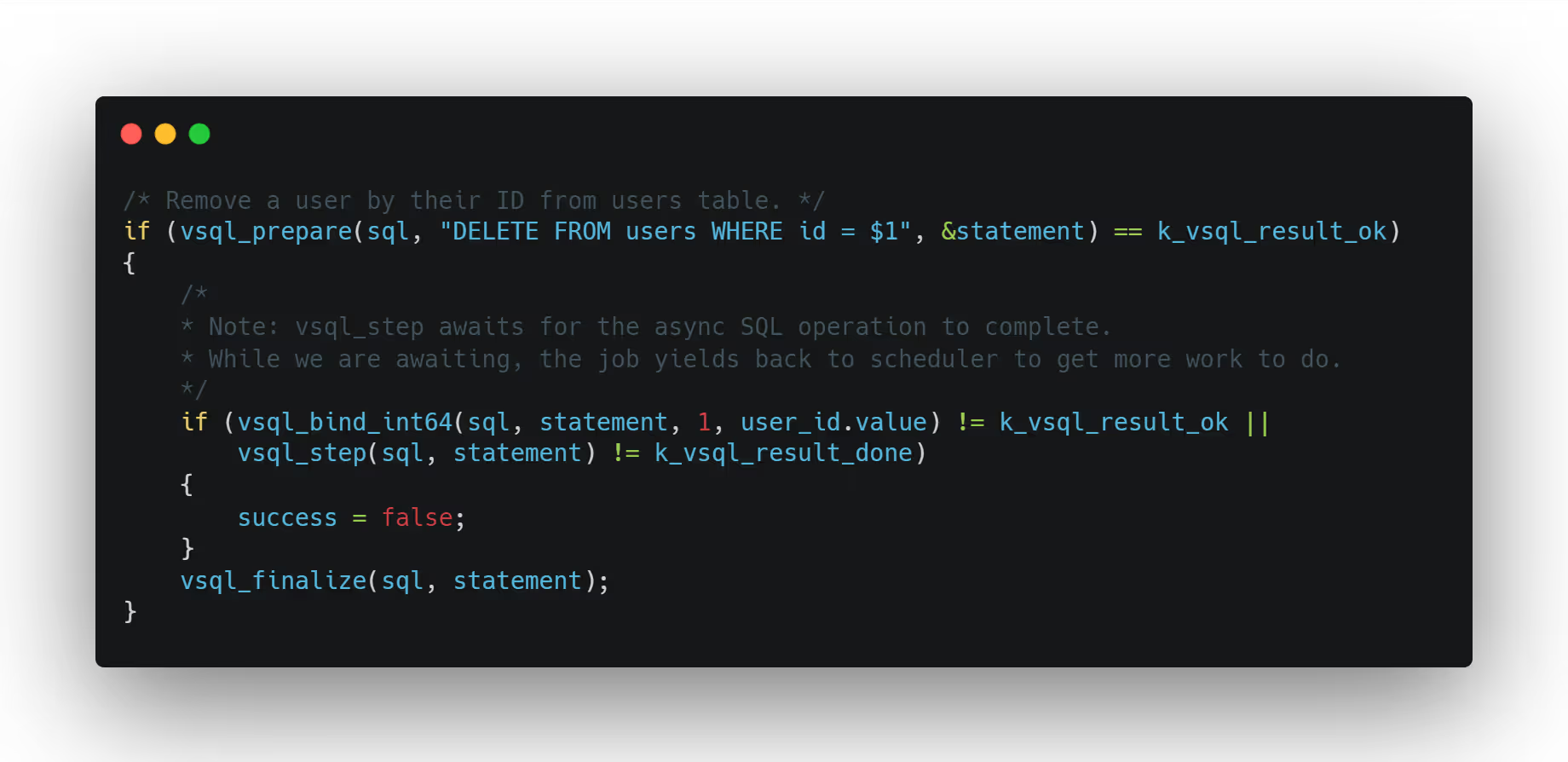

The backend spends most of its time responding to websocket messages. To handle a message, it typically creates a job in an instance of a Viper job system, the same type used by the game. Using the job system makes it possible to efficiently share resources while doing asynchronous operations, for instance relinquishing control of an operating system thread and CPU core while one job waits for a response from the database so that another job can take them over and do some computation. The end result is that we can write naively straightforward C code that makes requests to HTTP servers, PostgreSQL, and Redis in the same way and with basically the same semantics as is possible in modern languages with built-in async/await features.

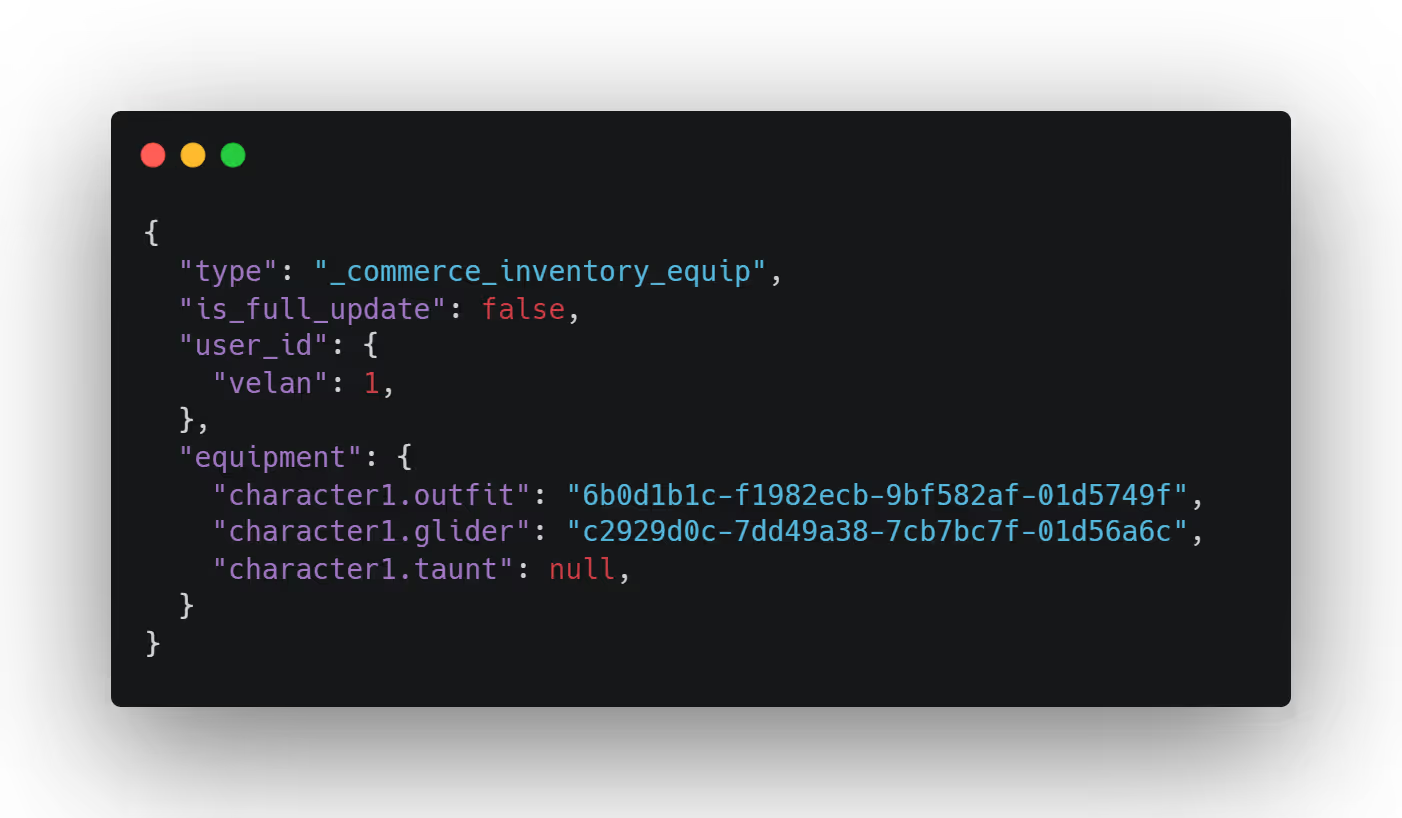
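To make the "yield while waiting" idea concrete, here is a minimal sketch of cooperative jobs in C. It is not Viper's job system: it runs on a single thread, uses the POSIX `ucontext` API (available on Linux with glibc, deprecated on some other platforms), and every name in it (`job_spawn`, `job_yield`, `job_run_all`, `example_job`) is made up for illustration. The point is only that a job can be written as straight-line code and still give up the CPU at the spot where it would wait for a database or HTTP response.

```c
#include <assert.h>
#include <stddef.h>
#include <ucontext.h>

#define JOB_STACK_SIZE (64 * 1024)
#define MAX_JOBS 8

typedef void (*job_fn)(void *arg);

struct job {
    ucontext_t ctx;              /* saved registers and stack pointer */
    char stack[JOB_STACK_SIZE];  /* each job runs on its own stack */
    job_fn fn;
    void *arg;
    int done;
};

static struct job jobs[MAX_JOBS];
static size_t job_count;
static struct job *current_job;
static ucontext_t scheduler_ctx;

/* Entry point of every job context; returning resumes uc_link (the scheduler). */
static void job_trampoline(void)
{
    current_job->fn(current_job->arg);
    current_job->done = 1;
}

/* Queue a job. Returns 0 on success, -1 if the table is full or setup fails. */
int job_spawn(job_fn fn, void *arg)
{
    assert(fn != NULL);
    if (job_count == MAX_JOBS) return -1;
    struct job *j = &jobs[job_count];
    if (getcontext(&j->ctx) != 0) return -1;
    j->ctx.uc_stack.ss_sp = j->stack;
    j->ctx.uc_stack.ss_size = sizeof j->stack;
    j->ctx.uc_link = &scheduler_ctx;
    j->fn = fn;
    j->arg = arg;
    j->done = 0;
    makecontext(&j->ctx, job_trampoline, 0);
    job_count++;
    return 0;
}

/* Called inside a job at the point where it would wait on I/O. */
void job_yield(void)
{
    assert(current_job != NULL);
    swapcontext(&current_job->ctx, &scheduler_ctx);
}

/* Round-robin over the jobs until every one has finished. */
void job_run_all(void)
{
    size_t remaining = job_count;
    while (remaining > 0) {
        for (size_t i = 0; i < job_count; i++) {
            if (jobs[i].done) continue;
            current_job = &jobs[i];
            swapcontext(&scheduler_ctx, &jobs[i].ctx);
            if (jobs[i].done) remaining--;
        }
    }
    current_job = NULL;
    job_count = 0;
}

/* Demo state: records the order in which job steps actually ran. */
char job_trace[16];
static size_t job_trace_len;

static void trace_step(char tag)
{
    if (job_trace_len < sizeof job_trace - 1) job_trace[job_trace_len++] = tag;
}

/* Reads like blocking code, but other jobs run during the "wait". */
void example_job(void *arg)
{
    char tag = *(const char *)arg;
    trace_step(tag);   /* step 1: send a request */
    job_yield();       /* stand-in for waiting on the response */
    trace_step(tag);   /* step 2: use the response */
}
```

Spawning `example_job` twice with different tags and calling `job_run_all()` interleaves the two jobs' steps rather than running each job to completion. A production job system would also need multiple worker threads and a way to wake a job when its I/O actually completes, which this sketch leaves out.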

The actual websocket messages are plain old JSON like this:

We considered using more sophisticated serialization techniques for these messages to reduce bandwidth, save CPU, or enforce strict message structure, but in the end the simplicity and familiarity of generating and extracting information from JSON won. Not having to incorporate another build step or take on the added cognitive load of another description language in our codebase to use a solution like gRPC were important factors, too.

All that said, we do more typing than we'd like to move information between Postgres, Redis, and clients. Which is to say the code is too verbose, and that is an area we definitely see room to improve.

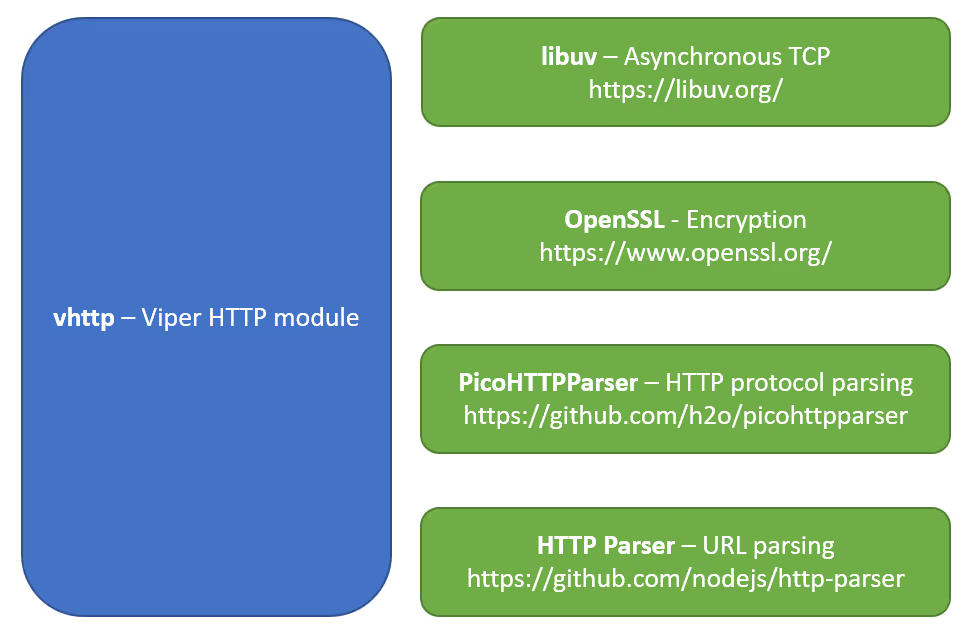

Some key parts that made all of this possible were custom HTTP client and server code and wrappers for Postgres, via libpq, and Redis, via hiredis, to bridge our job system and libuv, which we use for events.

Along the way, we had to weigh incorporating new technologies that seemed like the best tool for the job against our principles of owning our technology and eliminating barriers. One such situation was when we outgrew the SQLite database we had started our prototype with and wanted to require Postgres and Redis servers. Postgres wasn't as trivial to integrate into Viper as simply building it in like we did with SQLite, but we managed to wrangle the executables so that we can reliably launch and terminate an instance alongside the engine. Redis doesn't have a Windows build, but Memurai proved to be a compatible (enough) alternate implementation, so we didn't need to resort to requiring Docker or WSL on developer machines.

Implementing a Simple Feature

Many features supported by our backend are “a message handler and a database table.” What does that look like in practice? Let’s find out. Consider a feature to add a player to your list of blocked players.

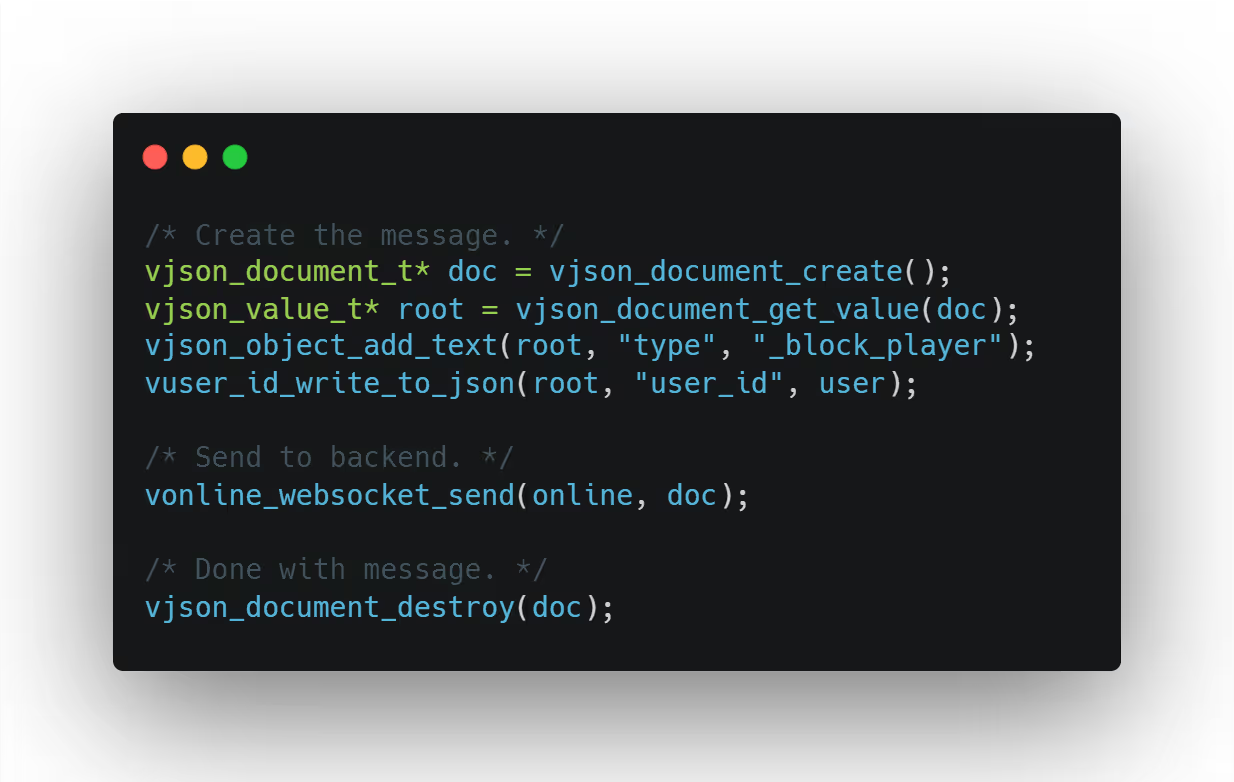

First, we need some client-side code that sends a message to the backend requesting that a player get added to the block list. Remember, all our messages are hand-rolled JSON.

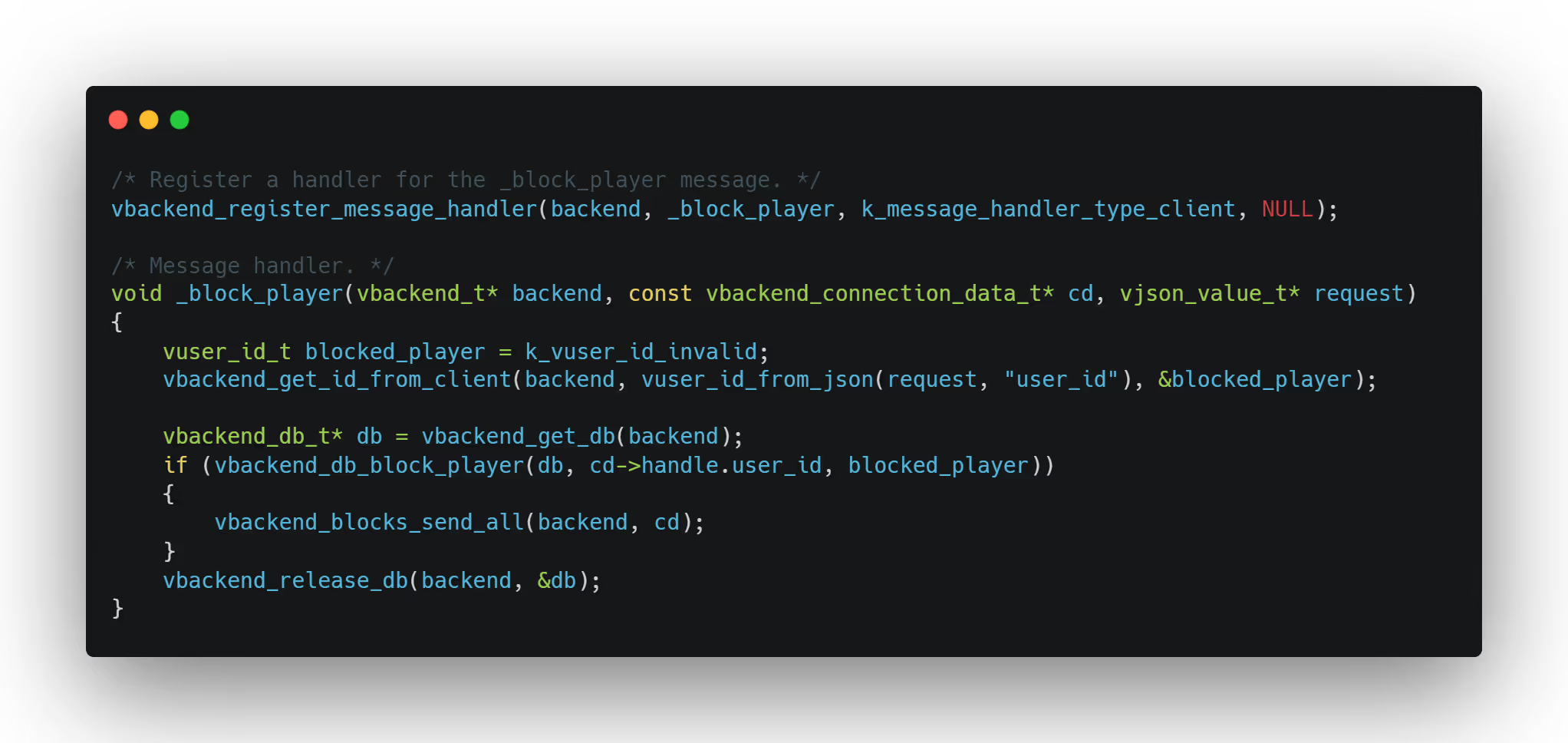
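As a rough illustration of what "hand-rolled JSON" can mean on the client side, the sketch below formats a block request with `snprintf`. The function name `build_block_request` and the `type` and `player_id` field names are assumptions made for this example, not Viper's actual wire format.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Writes a hypothetical "block_player" message into out.
   Returns the message length, or -1 if the buffer is too small. */
int build_block_request(char *out, size_t out_size, unsigned long long player_id)
{
    assert(out != NULL);
    int n = snprintf(out, out_size,
                     "{\"type\":\"block_player\",\"player_id\":%llu}",
                     player_id);
    /* snprintf reports the length it needed, so n >= out_size means truncation. */
    if (n < 0 || (size_t)n >= out_size) return -1;
    return n;
}
```

Because the only variable part is a number, no string escaping is needed here; a message carrying user-supplied text would have to escape quotes and control characters before writing them into the buffer.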

Next we need a message handler on the backend to receive this (error handling omitted for clarity).

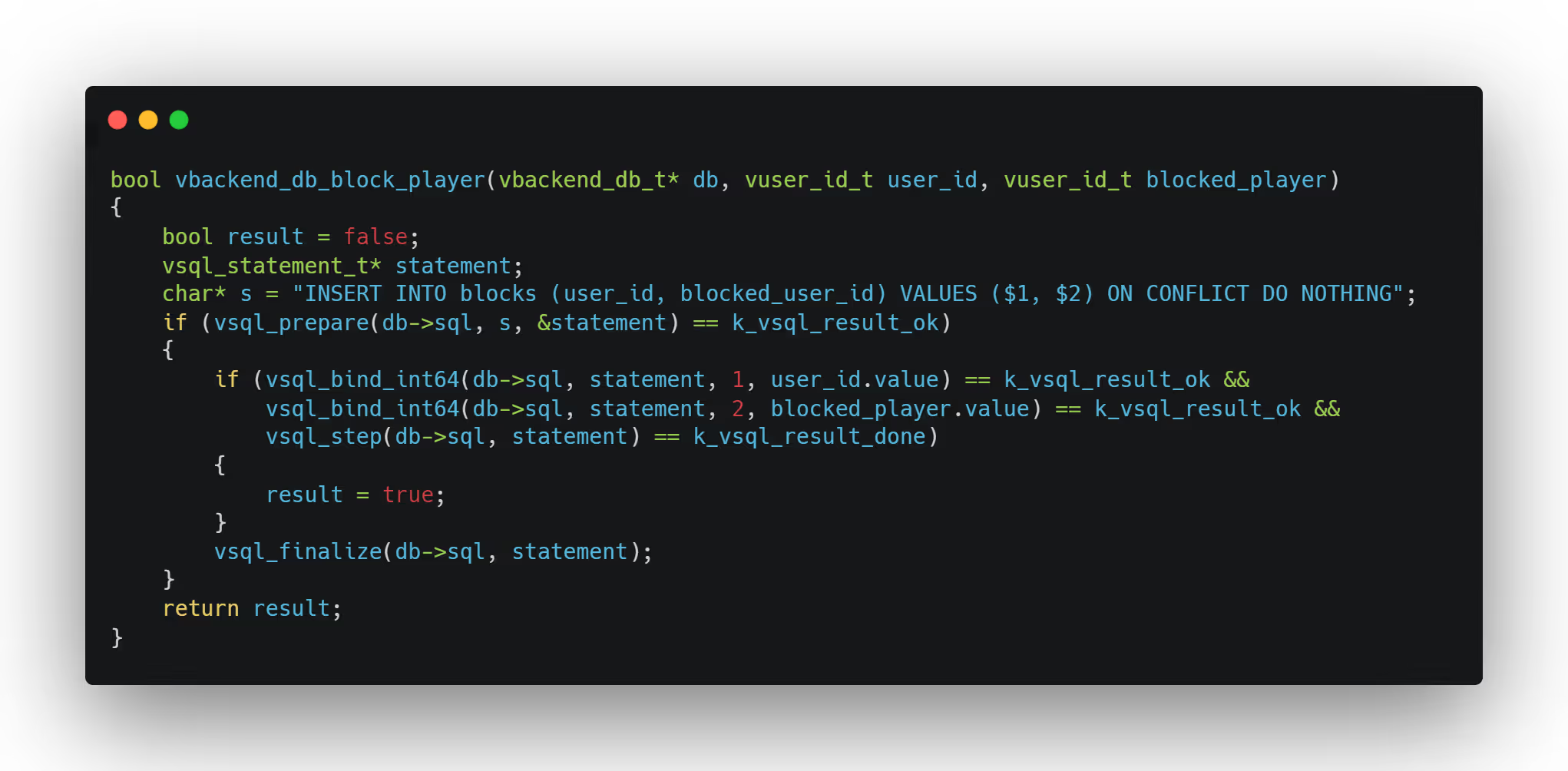
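One plausible shape for the receiving side is a table that maps a message type to a handler function. The sketch below assumes the same hypothetical `type` and `player_id` fields as before and compact JSON with no whitespace around the colons; `dispatch_message`, `handle_block_player`, and `last_blocked_id` are illustrative names, and the naive `strstr` matching is a stand-in for real JSON parsing.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

typedef int (*message_handler_fn)(const char *json);

/* Finds "key":<digits> and parses the digits. Returns 1 on success, 0 otherwise. */
static int json_get_u64(const char *json, const char *key, unsigned long long *out)
{
    char pattern[64];
    int n = snprintf(pattern, sizeof pattern, "\"%s\":", key);
    if (n < 0 || (size_t)n >= sizeof pattern) return 0;
    const char *p = strstr(json, pattern);
    if (p == NULL) return 0;
    p += n;
    char *end;
    unsigned long long value = strtoull(p, &end, 10);
    if (end == p) return 0;   /* no digits after the key */
    *out = value;
    return 1;
}

/* Stand-in for the real side effect (a database write). */
unsigned long long last_blocked_id;

int handle_block_player(const char *json)
{
    unsigned long long id;
    if (!json_get_u64(json, "player_id", &id)) return -1;   /* malformed request */
    last_blocked_id = id;
    return 0;
}

struct handler_entry {
    const char *type;
    message_handler_fn fn;
};

static const struct handler_entry handlers[] = {
    { "block_player", handle_block_player },
};

/* Returns the handler's result, or -2 when no handler matches the type. */
int dispatch_message(const char *json)
{
    assert(json != NULL);
    for (size_t i = 0; i < sizeof handlers / sizeof handlers[0]; i++) {
        char pattern[96];
        int n = snprintf(pattern, sizeof pattern, "\"type\":\"%s\"", handlers[i].type);
        if (n < 0 || (size_t)n >= sizeof pattern) continue;
        if (strstr(json, pattern) != NULL) return handlers[i].fn(json);
    }
    return -2;
}
```

Adding a feature then amounts to writing one more handler and adding one more row to `handlers`, which matches the "a message handler and a database table" description.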

Then we need the function that adds the blocked player to the database.
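The sketch below uses an in-memory table as a stand-in for the database so that it runs without a Postgres server. In a real backend this step would presumably be a parameterized query issued through the libpq wrapper; the SQL in the comment, the table and column names, and the rule that players cannot block themselves are all assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_BLOCK_ROWS 256

/* One row per (owner, blocked) pair, mirroring a hypothetical table:
     INSERT INTO blocked_players (owner_id, blocked_id)
     VALUES ($1, $2) ON CONFLICT DO NOTHING;                          */
struct block_row {
    unsigned long long owner_id;
    unsigned long long blocked_id;
};

struct block_table {
    struct block_row rows[MAX_BLOCK_ROWS];
    size_t count;
};

/* Returns 1 if a row was added, 0 if the pair already existed,
   and -1 if the request is invalid or the table is full. */
int block_table_add(struct block_table *t,
                    unsigned long long owner_id,
                    unsigned long long blocked_id)
{
    assert(t != NULL);
    if (owner_id == blocked_id) return -1;   /* assumed rule: no self-blocking */
    for (size_t i = 0; i < t->count; i++) {
        if (t->rows[i].owner_id == owner_id &&
            t->rows[i].blocked_id == blocked_id) {
            return 0;                        /* same effect as ON CONFLICT DO NOTHING */
        }
    }
    if (t->count == MAX_BLOCK_ROWS) return -1;
    t->rows[t->count].owner_id = owner_id;
    t->rows[t->count].blocked_id = blocked_id;
    t->count++;
    return 1;
}
```

Making the insert idempotent means a client that retries the same request after a dropped connection does not create duplicate rows.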

Finally, in response to adding a player to your block list, the backend sends the full block list back to the client. This looks similar to the code we used to send the “add player to block list” message to the backend.
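A response carrying the whole list can be serialized the same hand-rolled way, appending one element at a time and checking the remaining buffer space after each write. As before, `build_block_list_message` and the `block_list` and `players` names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Serializes ids as {"type":"block_list","players":[...]}.
   Returns the message length, or -1 if the buffer is too small. */
int build_block_list_message(char *out, size_t out_size,
                             const unsigned long long *ids, size_t count)
{
    assert(out != NULL);
    assert(ids != NULL || count == 0);

    size_t used = 0;
    int n = snprintf(out, out_size, "{\"type\":\"block_list\",\"players\":[");
    if (n < 0 || (size_t)n >= out_size) return -1;
    used += (size_t)n;

    for (size_t i = 0; i < count; i++) {
        /* A comma goes before every element except the first. */
        n = snprintf(out + used, out_size - used, "%s%llu",
                     i > 0 ? "," : "", ids[i]);
        if (n < 0 || (size_t)n >= out_size - used) return -1;
        used += (size_t)n;
    }

    n = snprintf(out + used, out_size - used, "]}");
    if (n < 0 || (size_t)n >= out_size - used) return -1;
    used += (size_t)n;
    return (int)used;
}
```

Sending the full list instead of a delta keeps the client logic simple: it replaces its local copy and never has to reconcile partial updates.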

There’s one more thing: testing. Best practice on the team is that whenever we add a new message handler, we also add a unit test (for correctness) and a matching action in our stress test client (so that we see how the message handler performs at scale).

Testing

Of course, none of this is useful if it isn't reliable in the real world. We could have chosen to write our services in a language that provides certain types of strong guarantees, but really it isn't enough to know that we haven't made type errors or created memory leaks. We needed confidence that the whole system would operate smoothly under the load of a worldwide launch, and so throughout development we made a significant investment in running tests.

In addition to the backend services, the infrastructure, the game client, and the headless servers, we also have a stress test client which exercises the backend services like real users do without the overhead of spinning up actual game servers (though to the backend it's the same!). We can run hundreds of stress test clients, each simulating many thousands of users signing in and out and doing every possible thing that involves the backend, roughly at the same rates that real users do them.

For well over a year during development, the backend crashed, misbehaved, and stopped responding. We found numerous operating system limits and learned how to tune them. We observed the behaviors of different PostgreSQL instance types and learned how to make our Redis queries fast enough. We fixed bugs, optimized, tweaked, rewrote, and improved. It took a lot of time, but it meant that when we finally did launch, and for the year and counting since, things have largely been smooth and stable. In the small handful of cases where things did start to show signs of misbehaving, we were prepared to spring into action and address them. Just because it's a new, big, multithreaded, networked C program doesn't mean it can't be reliable. Both testing and being able to thoroughly understand what we have built have been crucial to achieving that.

Conclusion

This concludes our tour of the Viper online backend services. There is so much more we could talk about – from individual features to architectural decisions, from mistakes made to lessons learned. Subjects for future blog posts?

If you’re interested in working on the small team that built all this, we are always hiring!